What are you researching? How are you researching it? Why is it important to research this subject?

My task is to explore the currently dominant rendering paradigm and the possibilities of future rendering methods. I will read through the user help documents of some popular software packages and test the software to gather different kinds of information.

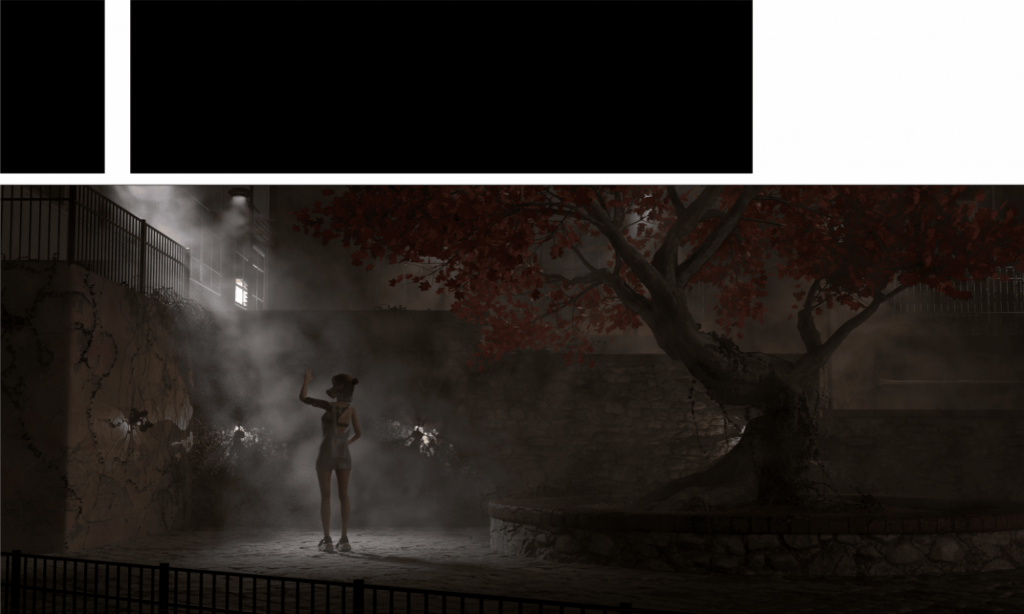

With the development of the metaverse, demand for rendering is growing. In the traditional film industry, practitioners can spend hours rendering a single image, and that speed is clearly not enough for a completely digital world. In the traditional games industry, rendering speed has increased dramatically, but accuracy is often difficult to achieve. Image quality and render time are becoming increasingly hard to reconcile.

Provide at least 5 keywords

rendering / model / texture / light / algorithm

rendering: The computer needs to integrate all the resources and present them on the display as pixels. Integrating these resources efficiently and processing the images quickly has become a major challenge.

model: How the surface of a model is processed is also very important in rendering. Handling complex models efficiently can speed up the rendering process.

texture: Texture systems were introduced to reduce the computing resources and time that rendering requires. They have significantly increased the efficiency of rendering and reduced its difficulty.

light: Calculating how light behaves takes up most of the rendering time, to the point that rendering an image is almost the same as rendering light.

algorithm: High-quality algorithms can significantly improve the efficiency of rendering, or even transform the rendering process altogether.

Provide at least four sources, debates, or texts on the subject area and a short explanation of the relevance to your project proposal.

https://docs.unrealengine.com/4.26/en-US/RenderingAndGraphics/RayTracing/

https://www.cnblogs.com/KillerAery/p/15106773.html

https://blogs.nvidia.com/blog/2018/03/19/whats-difference-between-ray-tracing-rasterization/

https://medium.com/@junyingw/future-of-gaming-rasterization-vs-ray-tracing-vs-path-tracing-32b334510f1f

https://www.autodesk.co.uk/products/arnold/features

The official documentation from NVIDIA and Autodesk does a good job of laying out the cutting-edge technology of the moment. Some of the blogs and documents posted by practitioners give a good indication of the history of rendering technologies and how they are intrinsically linked.